Python Training, Mentoring and Development Services

Python Training and Mentoring for you or your team

As an experienced Python trainer I can:

- Deliver your Python training materials

- Create new training materials

- Create and deliver bespoke Python training for your team or organisation

- Perform code reviews and mentor your staff

See below for examples of how I have helped others become better Python developers.

Python Software Development for your organisation

I enjoy the elegance of the core Python language, am slowly learning the standard library, and work with most common Python packages such as NumPy, pandas, Matplotlib, Django, Flask and TensorFlow.

I follow the PEP8 coding standard, strongly believe in meaningful variable names, and try to keep my code as simple as possible.

I can write faster Python code using benchmarking, multi processing, multi threading and async/await. This typically makes the code more complex so should be done sparingly.

Python Training and Mentoring

I love finding new ways to help people understand how Python works. It is such an elegant language. Whether I’m working one-to-one as a mentor, training groups face to face or remotely or creating training materials, I always learn something new. Here are some of the things I’ve worked on recently.

From time to time I have the pleasure to mentor fellow Python developers. I enjoy sharing my experiences and helping others to keep moving and …

Learning Tree International is a long-established and well-respected international training company. I have been delivering Python courses for Learning Tree since 2019, face to face …

Springer Science and Business Media Company, through their APress publishing arm, originally approached me about writing a book for them. I suggested creating a training …

Python Software Development

Python is a very expressive language. As Python developers we have access to 100,000s of libraries, saving a lot of coding effort. It may not …

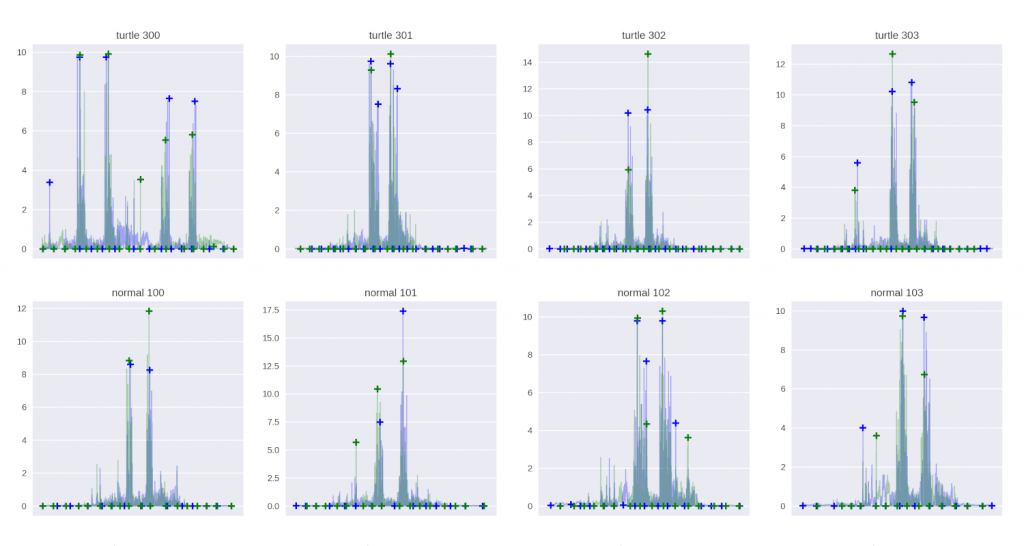

This was a very interesting project. The client had a ‘multi objective evolutionary algorithm’ implemented in Python. It tries to find an optimal solution to …

You might think that moving some data around from A to B should be simple. Unfortunately it is not that straight forward. Having done a …

Python is a very popular tool for data extraction, clean up, analysis and visualisation. I’ve recently done some work in this area, and would love …

My client, a start up with a lot of experience in their field, had identified an important gap in the market. Large sums of money …

The client, a highly respected training company, was using an in-house developed system to manage the courses, bookings, delegates, and sales and marketing processes. When …